Consistently apply Generative AI to every frame of a moving image with Runway Research.

Whether it’s because it can mimic just about any style or because it can create new art from a few words of input, AI-generated art is taking the internet by storm.

Now Runway, one of the companies behind Stable Diffusion, has released a new technique called “Video to Video.” It applies generative AI to video, using a few words or a reference image to apply a style to the structure of an original source video and create an original work. It promises to be a powerful pre-production tool in the near future.

AI Tools Put To Good Use

“AI systems for image and video synthesis are quickly becoming more precise, realistic, and controllable,” states the Runway Research website. “Runway Research is at the forefront of these developments and is dedicated to ensuring the future of creativity is accessible, controllable, and empowering for all.”

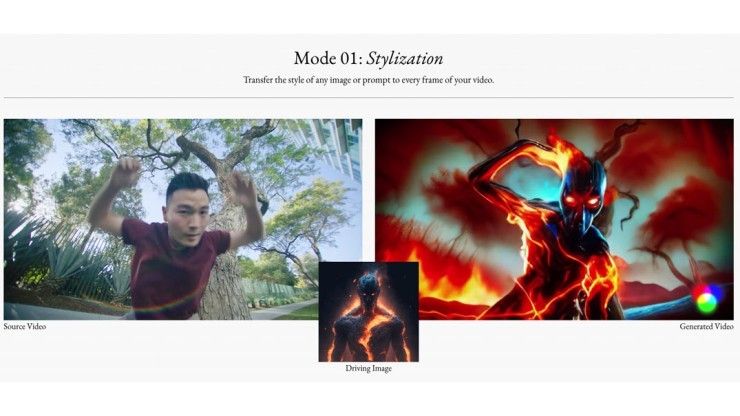

The new tool is called Gen-1 by Runway, and it uses a structure- and content-guided video diffusion model to edit videos based on visual or textual descriptions of the desired output. The technique, which Runway calls Video to Video, applies AI-generated features to entire videos to create otherworldly, creative works.

Today, Generative AI takes its next big step forward.

Introducing Gen-1: a new AI model that uses language and images to generate new videos out of existing ones.

Sign up for early research access: https://t.co/7JD5oHrowP

— Runway (@runwayml) February 6, 2023

AI Tools For A Variety of Image Modes

Building on Runway’s still-image AI engines, Latent Diffusion and Stable Diffusion, Gen-1 can consistently apply an image style and composition to every frame of a video. It offers five separate modes, depending on the desired outcome:

Stylization Mode: Where users can transfer the style of any image or prompt to every frame of a video.

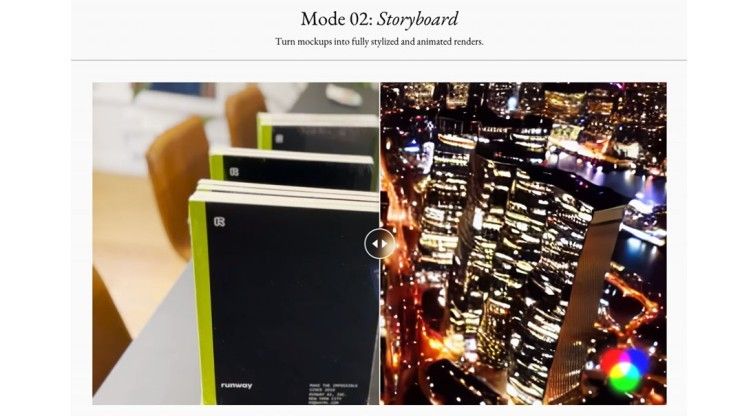

Storyboard Mode: Where still-image mockups are turned into fully animated, stylized video renders.

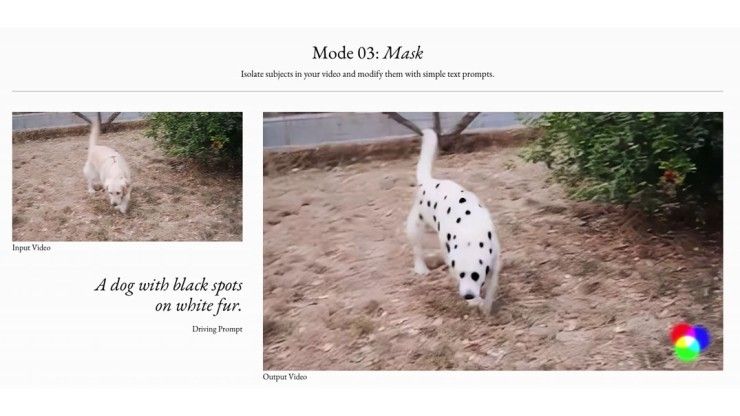

Mask Mode: Where subjects in a video can be isolated and modified without changing their surroundings.

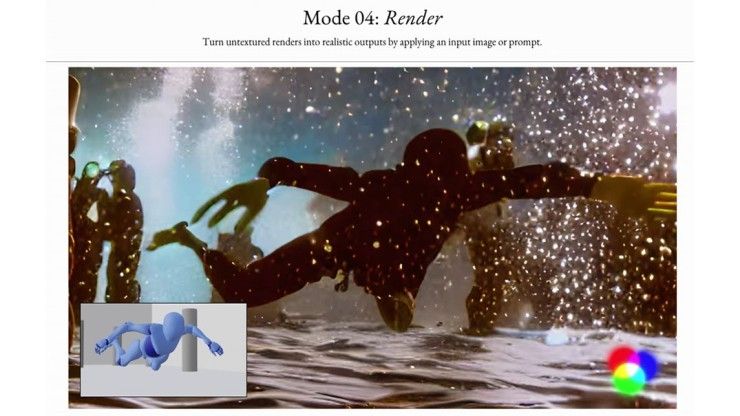

Render Mode: Where untextured 3D renders can be given a desired style, turning them into realistic animated works.

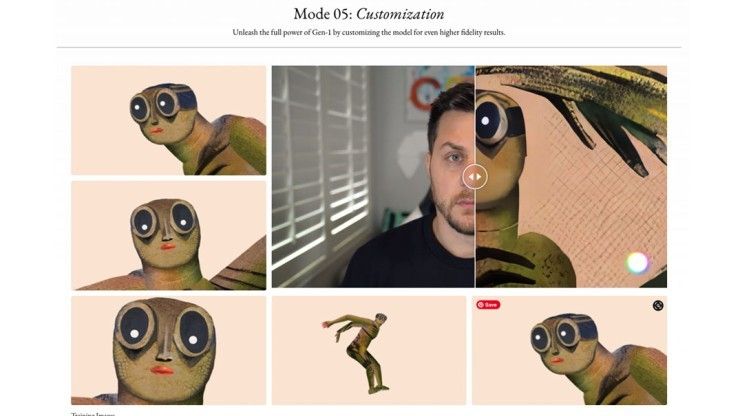

Customization Mode: Where the model itself can be customized further for unique, higher-fidelity video results.

All of this is done without building anything in the physical world, so a story idea can be presented before anyone goes in front of the cameras. It could be a game changer for generating pre-production animatics and testing CGI before moving on to more costly stages of production.

“It’s like filming something new, without filming anything at all,” states the Gen-1 website.

A Valuable Pre-Production Tool

With these powerful tools, filmmakers will be able to use AI to create or alter storyboards and video animatics in seconds. The Gen-1 Video to Video engine could cut the time spent making videos to explore new ideas and concepts, without laboring over complex animatics or building actual set pieces before shooting.

Look at Storyboard mode. In Runway’s demo, a few notebooks literally stand in for buildings; a short description of what Gen-1 should create is enough to turn them into an animated cityscape with an artistic flair within seconds. Render mode works the same way: 3D models that have been rigged and animated become realistic motion video with just a few descriptive words, without relying on expensive motion-capture studios or rigging.

The Future of Creation

While many AI stories have showcased the sheer power of machine learning to create original works, the Gen-1 Video to Video tool uses that power to supplement video content creators rather than replace them. As its ability to transform one video into another improves, filmmakers are sure to find new and interesting ways to create original content with it.

With Gen-1, content creators don’t need to worry that they may soon be replaced; in fact, this tool may help get them where they want to go.

To request access to Runway’s Gen-1, visit the Runway Research website.