Film Independent is currently in the middle of a Matching Campaign to fund the next 30 years of filmmaker support. All donations made on or before September 15 will be doubled, dollar-for-dollar, up to $100,000. To kick off the campaign, we’re re-posting a few of our most popular blogs.

Why 24 frames per second, why not 23 or 25?

Or for that matter, why not 10 or 100?

What’s so special about seeing images 24 times per second?

The short answer: not much. The film speed standard was a hack.

The longer answer: the entire history of filmmaking technology is a series of hacks, workarounds and duct-taped temporary-fixes that were codified, edified and institutionalized into the concrete of daily practice. Filmmaking is one big last-minute hack, designed to get through the impossibility of a shot list in the fading light of the day.

The current explosion in distribution platforms (internet, phones, VR goggles) means that the bedrock standard of 24 frames per second is under attack. The race towards new standards, in both frame rate and resolution, opens a whole new era of experimentation and innovation.

You are not a camera.

Truth is, cameras are a terrible metaphor for understanding how people see things. The human optic nerve is not a machine. Our retina is nothing like film or a digital sensor. Human vision is a cognitive process. We “see” with our brains, not our eyes. You even see when you’re asleep—remember the last dream you had?

Motion in film is an optical illusion, a hack of the eye and the brain. Our ability to detect motion is the end result of complex sensory processing in the eye and in specific regions of the brain. In fact, there’s an incredibly rare disorder called “akinetopsia” (motion blindness), in which a person can see stationary objects but not moving ones.

Like I said, how we see things is complicated.

So, why 24fps?

Early animators and filmmakers discovered how to create the perception of motion through trial and error, initially pegging the trick somewhere between 12 and 16 frames per second. Fall below that threshold and your brain perceives a series of discrete images displayed one after the other. Go above it and, boom: motion pictures.

While the illusion of motion works at 16 fps, it works better at higher frame rates. Thomas Edison, to whom we owe a great debt for this whole operation (light bulbs, motion picture film, Direct Current, etc.), believed that the optimal frame rate was 46 frames per second. Anything lower than that, he thought, resulted in discomfort and eventual exhaustion in audiences. So Edison built a camera and film projection system that operated at a high frame rate.

But given the slowness of film stocks and the high cost of film, this was a non-starter. Economics dictated shooting closer to the threshold of the illusion, and most silent films were shot at around 16-18 frames per second (fps), then projected closer to 20-24 fps. This is why motion in old silent films, Charlie Chaplin’s for instance, looks so comically fast: the film is sped up.
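To put numbers on that speed-up, here is a minimal Python sketch using the capture and projection ranges quoted above. The function names are our own, for illustration only; the arithmetic is simply the projection rate divided by the capture rate.

```python
def playback_speedup(capture_fps: float, projection_fps: float) -> float:
    """Return how many times faster motion appears on screen than it did on set."""
    return projection_fps / capture_fps


def screen_seconds(real_seconds: float, capture_fps: float, projection_fps: float) -> float:
    """Return how long an action lasting `real_seconds` on set takes on screen."""
    frames_exposed = real_seconds * capture_fps
    return frames_exposed / projection_fps


# Silent-era combinations drawn from the ranges mentioned above.
for capture, projection in [(16, 20), (18, 24), (16, 24)]:
    factor = playback_speedup(capture, projection)
    duration = screen_seconds(60, capture, projection)
    print(f"shot at {capture} fps, projected at {projection} fps: "
          f"{factor:.2f}x speed, 60 s of action plays in {duration:.1f} s")
```

Shot at 16 fps and projected at 24 fps, a minute of real action plays back in 40 seconds, one and a half times faster than life.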

While a 14% temporal difference in the picture is acceptable to audiences (people just move faster), in sound it’s far more noticeable and annoying, because speeding up a soundtrack also raises its pitch. With the advent of sync sound, there was a sudden need for a standard frame rate that all filmmakers adhered to from production to exhibition. The industry settled on 24 frames per second.
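Here is a rough way to see why sound is less forgiving than picture. This minimal Python sketch assumes plain varispeed playback with no pitch correction, where running the medium faster multiplies every frequency by the same ratio; the function names are illustrative, not from any audio library.

```python
import math


def semitone_shift(speed_ratio: float) -> float:
    """Pitch change, in semitones, when audio plays back `speed_ratio` times faster.

    Assumes simple varispeed playback with no pitch correction: every
    frequency is multiplied by speed_ratio, and one semitone is a
    frequency ratio of 2 ** (1 / 12).
    """
    return 12 * math.log2(speed_ratio)


def tempo_change_percent(speed_ratio: float) -> float:
    """How much faster everything happens, as a percentage."""
    return (speed_ratio - 1) * 100


ratio = 1.14  # the 14% difference mentioned above
print(f"{tempo_change_percent(ratio):.0f}% faster, "
      f"pitch raised by {semitone_shift(ratio):.2f} semitones")
```

A 14% speed-up raises every voice by just over two semitones, more than a whole step. That is easy to hear even when the matching picture just looks a little brisk.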

The shutter.

Like any illusion, there is always something there that reminds us it’s not real. For motion pictures, it’s the flickering shutter.

For a really interesting techie deep-dive into how this works, and how the three-bladed shutter was developed, take a look at Bill Hammack’s (aka “engineerguy”) breakdown of how a 16mm film projector works. A few other things on this particular subject:

Flicker reminds us that what we’re watching isn’t real.

I love the flicker. I think it’s a constant reminder, on some level, that what you’re seeing is not real. Film projected in movie theaters hasn’t changed much since the widespread acceptance of color and sound in the early 1930s. Due to technical and cost constraints, we have a standard: 24 frames per second, a three-bladed shutter and some dreamy motion blur, all projected as shadow and light on the side of a wall. We watch movies the way our great-grandparents did, and that connects us to a shared ritual.
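The three-bladed shutter works by flashing each frame onto the screen more than once, raising the flicker rate without spending any extra film. Here is a minimal Python sketch of that arithmetic, assuming the shutter makes one full revolution per frame. The function name is our own.

```python
def flashes_per_second(frames_per_second: int, shutter_blades: int) -> int:
    """Number of times the screen is lit each second.

    Assumes one shutter revolution per frame: each blade interrupts the
    light once per revolution, so every frame is flashed onto the screen
    `shutter_blades` times.
    """
    return frames_per_second * shutter_blades


for blades in (1, 2, 3):
    print(f"24 fps, {blades}-bladed shutter: "
          f"{flashes_per_second(24, blades)} flashes per second")
```

At 24 fps, a three-bladed shutter lights the screen 72 times a second, tripling the flicker rate while still using only 24 frames of film.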

While there have been fads like stereoscopic 3D, extra wide-framing and eardrum-shattering sound systems, most films are still shown the same, simple way. That is, until recently.

Not “cinema” anymore?

What happens when all your images are digital, from shooting to presentation?

What happens when high-resolution displays become handheld and ubiquitous?

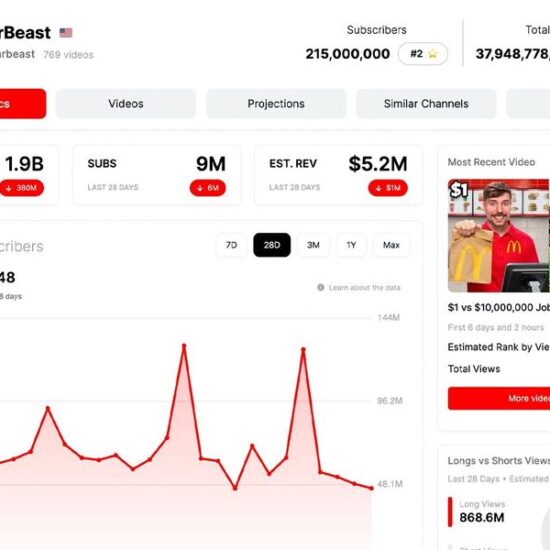

Digital cinema, decoupled from the pricey mechanical world of celluloid film stock, has allowed frame rates to explode into a crazy collection of use cases. High speed (meaning slow motion) used to mean shooting film at 120 frames per second and playing it back at 24. Now it means using an array of digital cameras working together to shoot a trillion frames per second and record light beams bouncing off of surfaces. That’s right: with digital motion picture cameras, you can literally film light itself as it travels.
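Slow motion is the silent-era speed-up run in reverse: you capture faster than you play back. This minimal Python sketch applies that ratio to both examples in the paragraph above, assuming the trillion-frame footage would be played back at a standard 24 fps. The function names are illustrative.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60  # average (Julian) year


def slow_motion_factor(capture_fps: float, playback_fps: float) -> float:
    """How many times slower than life the action appears on playback."""
    return capture_fps / playback_fps


def playback_seconds(real_seconds: float, capture_fps: float, playback_fps: float) -> float:
    """Screen time needed to show `real_seconds` of captured action."""
    return real_seconds * slow_motion_factor(capture_fps, playback_fps)


# Classic film high speed: 120 fps played back at 24 fps.
print(slow_motion_factor(120, 24))  # 5.0

# One second captured at a trillion fps, played back at 24 fps.
years = playback_seconds(1, 1e12, 24) / SECONDS_PER_YEAR
print(f"{years:,.0f} years of screen time")
```

At 24 fps, a single second captured at a trillion frames per second would take roughly 1,300 years to play back.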

Alphabet Soup

In the last decade, all of the different technical innovations in digital filmmaking coalesced into one massive, chemical-sounding acronym: “S3D HFR”. That stands for Stereoscopic 3D (a separate picture for each eye, creating the illusion of three dimensions) at a High Frame Rate (like 120 frames per second). Peter Jackson did this on the Hobbit films, shooting at 48 frames per second, and the reviews were mixed.

Stepping into a movie, which is what S3D HFR is trying to emulate, is not what we do at movie theaters. This new format throws so much information at your brain, while simultaneously removing the 2D depth cues (limited depth of field) and temporal artifacts (motion blur and flicker) that we are all accustomed to seeing. It confuses us because it’s not the ritual we’re used to.

But there is a medium where high frame rates are desired and chased after: the modern video game.

Gamers want reality, so they build reality engines.

From photorealistic, real-time rendering pipelines to supremely high frame rates, digital gaming systems are pushing the envelope for performance. Game engineers build systems around massively parallel graphics processing units (GPUs): computers within the computer that exist purely to push pixels onto the screen.

Modern video games are a non-stop visual assault of objects moving at high speed, seen from a point of view the player can aim anywhere at will. All this kinetic, frenetic action requires high frame rates (60, 90, 100 fps) to keep up. As a side effect, GPUs have also become the processing engines for artificial intelligence and machine learning systems. A technology pioneered to let you mow down digital zombies at 120 frames per second is also why Siri answers your questions a little better.

Immersion: Where We’re Going Next

As new digital display technologies replace film projection, higher frame rates suddenly become practical and economical. And as monitors move off of walls and onto your face (because smartphones), all the cues that tell our brains that motion is an illusion will begin to break down. Moving pictures no longer appear as shadows and light on a flat wall.

The telltale flicker that reminds our unconscious mind that the picture is not real will disappear, as the very frame separating “constructed image” from “the real world” dissolves into a virtual world of 360-degree immersion. Frame rate becomes a showstopper when wearing a Vive or Oculus Rift: at 30 fps you’re queasy, at 24 fps you’re vomiting. The minimum frame rate for virtual reality systems is 60 fps, with many developers aiming for 90 to 120.
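Each step up in frame rate shrinks the time a game engine or VR system has to draw every picture. Here is a minimal Python sketch of that per-frame time budget for the rates mentioned above. The function name is our own.

```python
def frame_budget_ms(fps: float) -> float:
    """Milliseconds available to render and display each frame at a given rate."""
    return 1000.0 / fps


# Cinema, the queasy 30 fps, the VR floor of 60, and common VR targets.
for fps in (24, 30, 60, 90, 120):
    print(f"{fps:>3} fps -> {frame_budget_ms(fps):5.2f} ms per frame")
```

At 90 fps the whole scene has to be drawn in about 11 milliseconds, roughly a quarter of the 41.7 ms that a single 24 fps film frame gets.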

The inverse of VR is augmented reality, in which the pictures appear to run loose in the real world. Systems like Magic Leap (which has yet to come to market) and Microsoft HoloLens are taking images out of the frame and into the real world. These systems use sophisticated, real-time positional data on the user’s head, eyes and body, along with recognition of real-world objects, to blend virtual characters into our everyday lives.

The goal of these augmented reality systems is to create an experience that is indistinguishable from the real world, so that some day, very soon, the illusions we used to watch on screens, flickering in the darkness, will run into our living rooms and tell us that we have an email.

A few things about how to make immersive cinema:

Directing in VR.

In traditional cinema, directors use shot selection, camera movement and editing to determine the pace and focus of each scene. But all those tools go out the window (to varying degrees) in VR, which requires minimal cutting and camera movement so as not to disorient your viewer or make them barf.

But, as the filmmaker, you’re still in charge of steering your audience toward points of interest. This can be done in a variety of ways: blocking and motion, set layout, lighting, audio, etc. Just remember: since everything in your video will likely need to play out in longer unbroken takes, without you on set, pre-production and rehearsal are your best friends. Leave nothing to chance.

What kind of camera do I need?

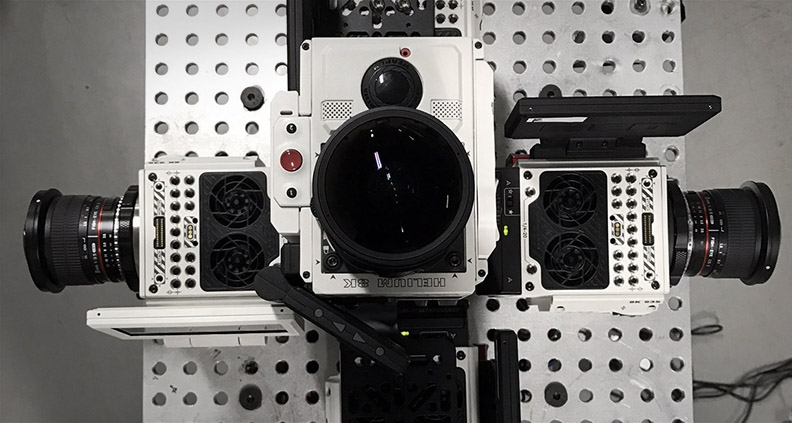

The technology used to capture and create 360/VR images is constantly evolving, but it’s important to understand the two basic kinds of 360-degree cameras out there: stereoscopic and monoscopic. Monoscopic cameras (such as Samsung’s Gear 360) use two back-to-back 180-degree lenses to capture a full 360-degree immersive video image.

These cameras are usually cheaper, with lower resolution, and often feature in-camera “stitching” (i.e., joining two or more images together to create a complete 360-degree view). They’re good for novice VR creators to experiment and learn on.

Stereoscopic cameras, like GoPro’s Odyssey VR rig, capture multiple, higher-resolution images and frequently require an outside program, like the Jump Assembler, to stitch the images together. Either way, consider your project and what it requires.

Workflow, audio, and other fun stuff.

Being precise about your production workflow is critical in VR, as errors made at the top of the chain can cascade down and complicate production in a variety of frustrating ways. After pre-production comes the actual shoot.

After that, it’s time to stitch your images together and reformat all of your media for video editing. Luckily, recent versions of Adobe Premiere are fully equipped for 360-degree video, as long as you set up your new project for it. Edit, export and test your cut in an actual VR headset. Don’t just eyeball it in your project file or as a flat, unwrapped image on your desktop.
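Why isn’t the flat, unwrapped image good enough? Most 360-degree video is stored as an equirectangular frame, which stretches the sphere of view onto a 2:1 rectangle. Here is a minimal Python sketch, assuming that format and a 3840×1920 frame (both assumptions about your particular pipeline; the function name is hypothetical), showing how viewing directions land on pixels.

```python
def direction_to_pixel(yaw_deg: float, pitch_deg: float,
                       width: int, height: int) -> tuple[int, int]:
    """Map a viewing direction to a pixel in an equirectangular 360-degree frame.

    yaw_deg: -180 (left edge) .. +180 (right edge), 0 = straight ahead.
    pitch_deg: +90 (straight up, top row) .. -90 (straight down, bottom row).
    """
    x = (yaw_deg + 180.0) / 360.0 * width
    y = (90.0 - pitch_deg) / 180.0 * height
    # Clamp so the extreme angles still land on a valid pixel.
    return min(int(x), width - 1), min(int(y), height - 1)


W, H = 3840, 1920  # assumed 2:1 frame size
print(direction_to_pixel(0, 0, W, H))      # (1920, 960): center of the frame
print(direction_to_pixel(-180, 89, W, H))  # (0, 10): one degree below straight up
print(direction_to_pixel(90, 89, W, H))    # (2880, 10): same patch of sky
```

The last two directions are less than two degrees apart in the headset, yet they sit 2,880 pixels apart on the flat frame. That is why the unwrapped image looks so stretched near the top and bottom, and why it is no substitute for checking your cut in a headset.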

Repeat the process until satisfied. Finally, it’s time for your end-of-chain post work, which can include compositing, stabilization, SFX and spatialized audio. “Spatialized audio” refers to creating a stereo audio track that works in conjunction with a 360-degree image, panning sounds to follow characters or objects as they move around the 360-degree environment.
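To make the panning idea concrete, here is a minimal Python sketch of one simple approach: a constant-power stereo pan driven by where a sound source sits relative to the viewer’s gaze. This is a deliberately simplified illustration and our own assumption, not how Facebook’s tools or ambisonic formats actually work. The function name and angle convention are hypothetical.

```python
import math


def pan_gains(source_yaw_deg: float, head_yaw_deg: float) -> tuple[float, float]:
    """Constant-power left/right gains for a sound source in a 360-degree scene.

    Angles are in degrees, clockwise from straight ahead (+90 = viewer's right).
    This simple stereo pan ignores front/back and height cues, which real
    spatial-audio tools model.
    """
    # Source direction relative to where the viewer is looking, in [-180, 180).
    relative = (source_yaw_deg - head_yaw_deg + 180.0) % 360.0 - 180.0
    pan = math.sin(math.radians(relative))   # -1 = hard left, 0 = center, +1 = hard right
    theta = (pan + 1.0) * math.pi / 4.0      # 0 .. pi/2
    return math.cos(theta), math.sin(theta)  # left**2 + right**2 == 1 (constant power)


# A character walking around a viewer who keeps looking straight ahead.
for yaw in (0, 45, 90, 180, 270):
    left, right = pan_gains(yaw, head_yaw_deg=0)
    print(f"source at {yaw:>3} deg: L={left:.2f} R={right:.2f}")
```

Keeping the squared gains summing to one holds the perceived loudness steady as a character circles the viewer. Because the sketch uses only the left/right component, a source directly behind sounds the same as one directly in front, which is one of the cues dedicated spatial-audio tools add back.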

Spatialized audio tracks can be created with third-party programs (such as Facebook’s 360 Spatial Workstation) and converted into a standard stereo audio file that can be edited in Premiere.

Film Independent promotes unique independent voices by helping filmmakers create and advance new work. To become a Member of Film Independent, just click here. To support us with a donation, click here.