With its launch of Bing AI in February, Microsoft jumped in front of what could charitably be called flat-footed competition. Google eventually got a decent version of Bard out there, but it’s still perceived as behind the Bing AI chatbot. Microsoft is about to try to lengthen that lead with broader Bing AI access and a host of powerful new features.

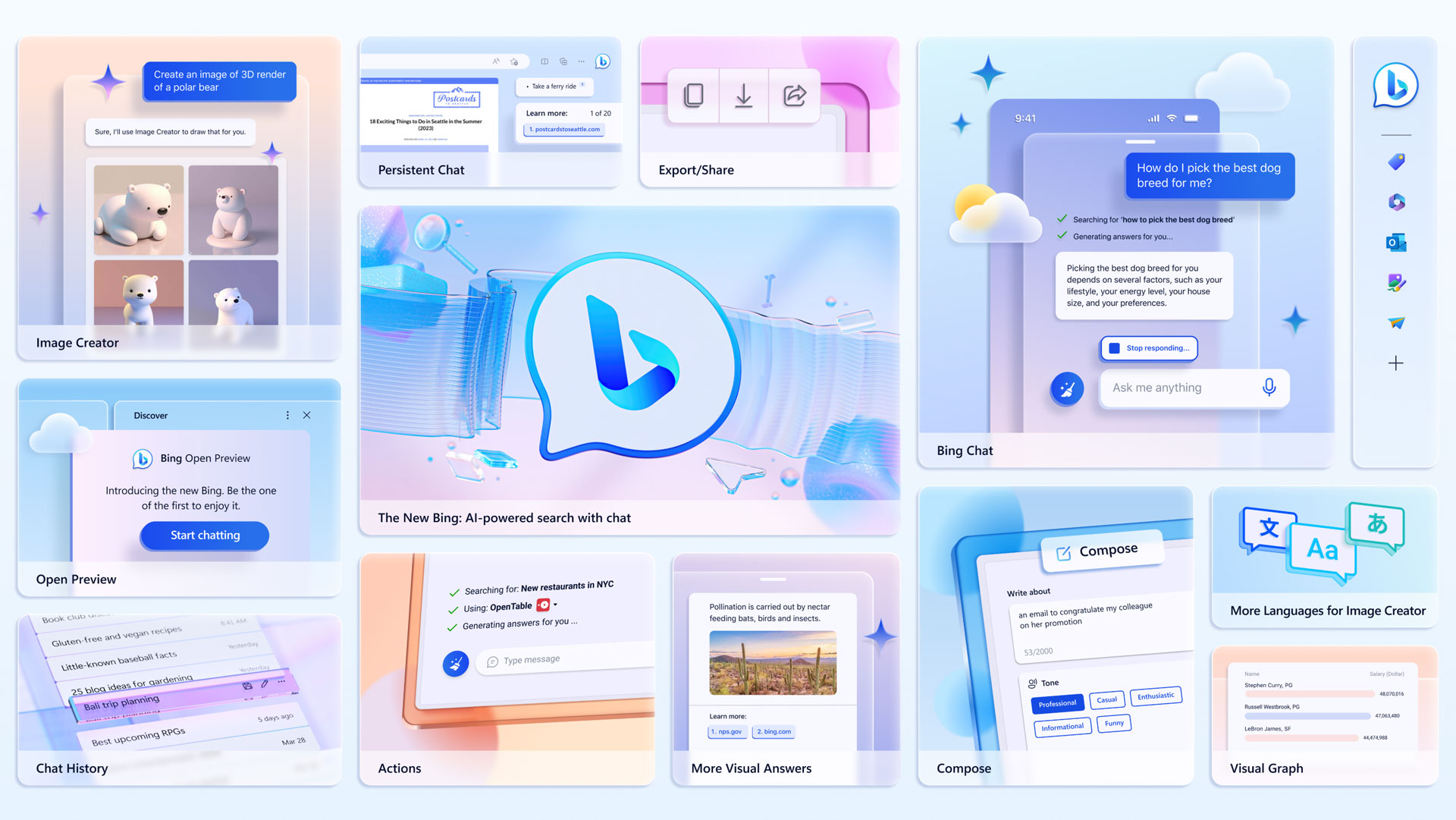

Before Microsoft adds anything, though, it’s removing one major hurdle: the waitlist. As of Wednesday (May 4), Bing AI, which taps into the Bing search engine, is an open preview, meaning anyone can try it out by signing in with Microsoft account credentials.

The Bing AI these new users will encounter today is essentially unchanged from what we first encountered at the launch in February. That, too, will soon change.

Microsoft Chief Marketing Officer Yusuf Mehdi announced the update in New York, telling a small group of journalists that the initial response to Bing AI has been strong. Since launch, the GPT-4-powered Bing AI has, Mehdi said, delivered half a billion chats and 200 million people have used the platform to generate AI images.

It’s an apparent lead that Microsoft is keen to capitalize on with a multimedia-infused collection of updates that, based on what I saw in a handful of demos, may turn the act of online search on its ear.

One of the biggest updates, which Microsoft could only demonstrate with a canned but impressive video, is multimodal search. In addition to text-based queries, the new Bing AI chatbot will accept images you either paste into the interface or drag and drop from the desktop or a folder.

In the demonstration I saw, Microsoft found online images of yarn animals (a knitted horse and a knitted monkey), dragged one into the new Bing chat, and then typed a text prompt asking whether Bing could show how to recreate the knitted monkey.

Bing AI knows that you’re talking about the image you just dropped in and responds appropriately with instructions. When another knitted animal image is dropped in, the query only needs to ask, “What about this one?”

Another big change coming to Bing AI is the ability to return photos and videos with text results. In our demos, we asked Bing AI why Neptune is blue, and in addition to the text explanation (something to do with its methane atmosphere), we got a nice big photo of the blue planet.

When we asked Bing AI for skiing lessons, we got a bunch of YouTube videos that would play inside the chat window or in one of the new cards that pop up on top of the chat result, featuring these videos and photos.

Other updates include the ability to save all of your previous Bing AI chat sessions, which you can easily access in a sidebar window pane. Better yet, you can rename any of the sessions for clarity. Sadly, there does not appear to be a way of organizing them into topic groups.

All Bing AI chats will soon be easily exportable as text, Word files, and PDFs. You can also share your own Bing AI search journeys with other people, and they will play back in exactly the same way as you first saw them (it’s unclear if there’s a way to fast-forward to the completed result).

Microsoft is also enhancing the Edge browser integration. When you open a link in the updated Bing AI chat, you don’t leave the chat window behind. Instead, it moves to a redesigned sidebar pane while the opened link appears in a redesigned Edge browser (the only design change I noted was curved corners).

There are some nifty new Bing AI chat Edge functionality integrations like an “organize my tabs” prompt that groups tabs by topics and themes. Bing AI chat can also help you find hidden Edge features like the ability to import passwords from another browser.

Microsoft also announced the addition of Bing AI plug-in support, which would allow partners like OpenTable and Wolfram to connect their products and services directly to Bing AI chat results.

Lessons learned and being responsible

The race by Microsoft and its competitors to bring AI-infused chatbot technology to the masses has sparked equal parts excitement and concern. Microsoft didn’t spend much time talking about the potential pitfalls of rapid AI adoption, but Mehdi did explain why Microsoft has been essentially testing Bing AI with the public.

“We believe that to bring this technology to market in the right and responsible way is to test out in the open, is to have people come see it and play with it and get feedback.

“I know there’s lots of talk about, ‘Hey, at what pace are you going, too fast, too slow?’ In our minds…you have to be grounded in great responsible AI principles and approach, which we’ve done for many years, and then you have to get out and you have to test that and get the feedback,” said Mehdi.

Later, I spoke to Microsoft VP of Design Liz Danzico who likened her very first experience with the raw GPT-4 to another tech epoch.

“Kind of that feeling after I saw the iPhone video,” she explained.

As with that and other life-altering moments, Danzico wanted to call her parents and ask “Are you okay? The world is different.”

Danzico, who was involved in developing the look and feel of Bing AI’s chat interface, understands the concerns about AI and the need for her team to be careful not to go too far or over the line.

“We are aware of the line, and very dedicated to making sure we put arrows that point to the line for our users.”

During the development of Bing AI chat, Danzico’s team worked closely with Microsoft’s Responsible AI team to ensure that their principles were upheld throughout the product. She added that they understand “it’s an imperfect system today and we are still learning.”

The concern, of course, is not just about too much or out-of-control AI; it can also be about more mundane things, like a comprehensible interface and feature elements. Danzico told me that she was pleasantly surprised to find that users quickly understood things she assumed they would struggle with, like the slider between Bing AI search and traditional search.

They did find that Bing AI users didn’t really understand that the broom icon indicates “clear memory” of the chat conversation so they could start over, as opposed to receiving additional answers that still follow the original context.

They’re replacing the broom, she told me, with a clearer “new Chat” icon.